Implementing and Leveraging Machine Learning at State Departments of Transportation (2024)

Chapter: 5 Machine Learning Tools

CHAPTER 5

Machine Learning Tools

Introduction

This chapter provides an overview of various Machine Learning (ML) tools, categorized by the level of coding skills required to use them. The main goal of this chapter is to increase awareness among Department of Transportation (DOT) personnel of the ML tools and their capabilities. The sample ML tools presented here are expected to help DOTs better understand the wide range of options available for building and leveraging ML solutions for their specific needs and use cases.

The intention of this chapter is not to document an exhaustive list of ML tools as there are numerous ML tools available today and more are constantly being created. Furthermore, the ML pipeline includes data collection, cleaning, labeling, wrangling (transforming and structuring data from raw form into a desired format), management, visualization, and other tasks. Each of these tasks is critical to the success of ML projects, and there are specialized products and tools designed to facilitate these processes. However, discussing all these supporting tools is not within the scope of this chapter.

The selection of ML tools presented in this chapter is primarily informed by the authors’ personal experience and expertise in the field, supplemented by comprehensive web-based research. It is important to clarify that the authors, as well as the TRB, do not endorse or recommend the specific ML tools mentioned herein for use by DOTs. The intention is not to promote any products but rather to provide a broad overview of the types of tools available, both commercial and open source, and their potential applications in transportation-related projects.

Each transportation project entails unique challenges and requirements, and the suitability of a particular ML tool can vary significantly depending on the specific nature and demands of the project. Therefore, while the tools discussed in this chapter represent a cross section of what is currently available and widely used in the ML community, they are by no means the only options. DOTs are encouraged to conduct thorough research when selecting ML tools, considering factors such as compatibility with existing systems, ease of use, technical support, and scalability. Furthermore, the ML field is highly dynamic and rapidly expanding, which means new tools and improved versions of existing ones are continually being developed. DOTs should remain open to exploring and evaluating emerging technologies that might offer enhanced capabilities or better alignment with their specific project goals and operational contexts.

In general, “ML tools” refer to software applications, platforms, libraries, or frameworks that are used in building and deploying ML solutions. These tools facilitate various aspects of ML processes, from data preparation and analysis to model development, training, evaluation, and deployment. The term encompasses a wide range of functionalities and can be categorized into several types:

- Data Preprocessing Tools: These are used for cleaning, normalizing, transforming, and organizing raw data into a suitable format for ML. There are various code libraries prepared to facilitate data preprocessing and wrangling tasks (e.g., Pandas and NumPy in Python, dplyr, and data. table packages in R programming language).

- Machine Learning Libraries and Frameworks: These provide algorithms for building machine learning models. They include libraries like TensorFlow, PyTorch, Scikit-Learn, and Keras, which offer pre-built algorithms for tasks like regression, classification, clustering, and deep learning.

- Automated Machine Learning (AutoML) Tools: These tools automate the process of applying machine learning, making it more accessible to non-experts. They can automatically select the best algorithms and tune parameters. Examples include Google AutoML and H2O.ai.

- Model Deployment and Serving Tools: After a model is trained, these tools help in deploying the model into production environments where they can make predictions on new data. Examples include TensorFlow Serving, AWS SageMaker, and Microsoft Azure ML.

- Model Monitoring and Management Tools: These tools are used for monitoring the performance of machine learning models in production, managing their life cycle, and updating them as necessary. Examples include Kubeflow and MLflow.

- Development and Collaboration Tools: These include integrated development environments (IDEs), version control systems, and collaboration platforms that facilitate the development of machine learning models, such as Jupyter Notebooks, Google Colab, and GitHub.

- Visualization Tools: Tools like Matplotlib, Seaborn, and Tableau are used for visualizing data and the results of machine learning models, which is crucial for analysis and interpretation.

- Specialized ML Tools: These are tools designed for specific applications of machine learning, such as natural language processing (NLP), computer vision, or time series analysis.

The following sections provide concise descriptions of a sample of tools, with a focus on tools for model development and ML frameworks. It highlights their key functionalities and typical use cases. These descriptions are not meant to capture all their capabilities as some of these tools may continually be revised and updated. More up-to-date information can be found by visiting the respective websites. It should also be mentioned that some of these tools may become obsolete in the future as new and more effective tools are developed.

ML Tools Requiring Minimal or No Coding Skills

As ML technologies, and more generally AI, expand and permeate into various sectors, a plethora of ML tools targeting those with no coding skills have been created within the last few years, making it possible for a wider range of professionals to leverage the power of ML/AI. According to one study, by 2025, 70% of new applications developed by organizations will use low-code or no-code technologies, up from less than 25% in 2020 (Gartner 2021) The capabilities of these tools range from applying an already developed ML model through an intuitive user interface to training and development of new ML models and algorithms. These tools are expected to facilitate broader adoption of ML technologies as they will enable individuals and enterprises who may not have the skillset and resources to develop their ML capabilities.

A large set of ML/AI products and tools available today are targeting marketing, sentiment analysis, and new content creation (e.g., video, image, text). These types of products are based on “generative AI” methods that learn the underlying patterns, styles, or rules from the data they are trained on. Once trained, these tools can generate new content that resembles the training data but is original in its composition. For example, the popular ChatGPT by OpenAI can respond to complex queries and generate human-like text. While these types of ML tools have a broader range of applications, their applications to DOT operations are currently somewhat limited. However, in autonomous driving research, generative ML/AI models are playing an important role in creating synthetic data that mimics real-world driving scenarios, which is valuable for testing the safety and reliability of autonomous vehicles. These generative models can produce diverse, realistic traffic situations, weather conditions, and other variables that a self-driving car might encounter, providing a rich dataset for training and validating the vehicle’s motion control systems.

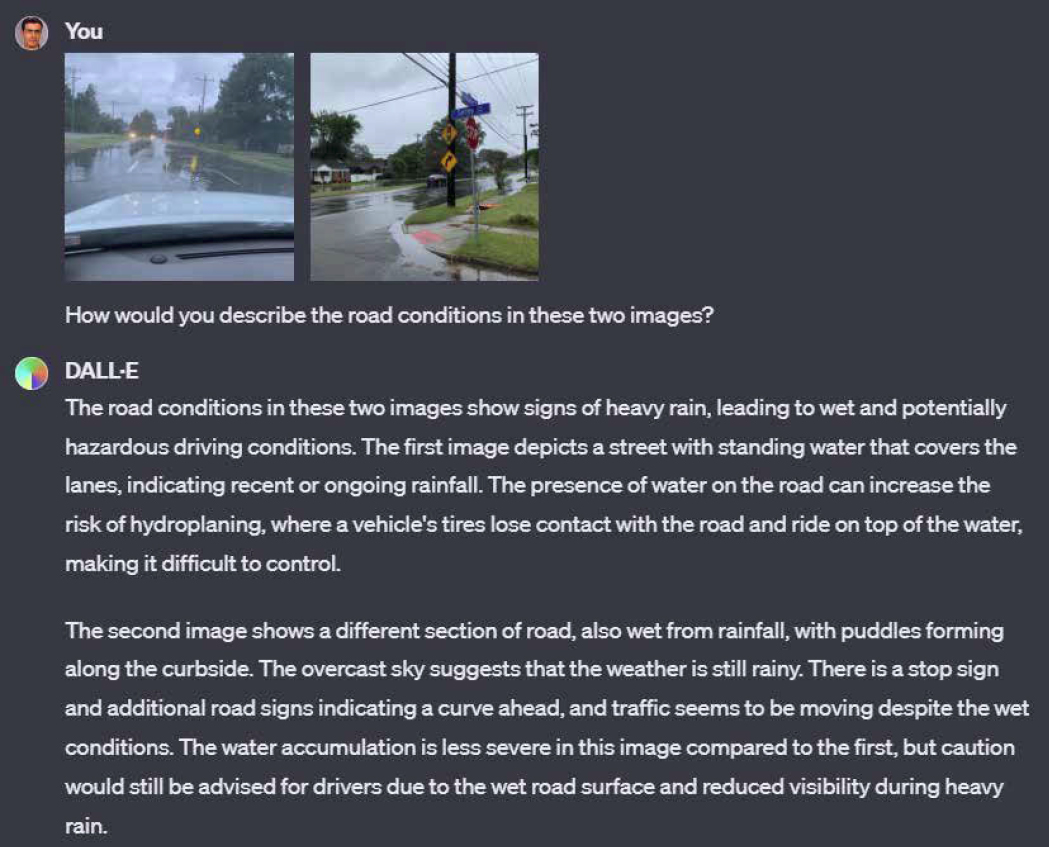

ChatGPT: ChatGPT is an advanced large language model (LLM) developed by OpenAI, based on the Generative Pre-trained Transformer (GPT) architecture. It can interpret and respond to complex queries and excels in understanding and generating human-like text, making it highly effective for a wide range of applications, including automated customer service, data analysis, summarizing documents and reports, and content creation. At the time of writing this report, GPT-4 Turbo was released which included several enhancements and new features including support for longer context length (equivalent to 300 pages of a book) and integration with text-to-speech and image generation models. In particular, from ChatGPT the image generation and interpretation model DALL-E can be accessed and leveraged for various applications. For example, in Figure 10 two sample images showing partial flooding on the road are uploaded to ChatGPT for analysis. The responses provided by DALL-E show that it can detect flooding on the road and provide an assessment of road conditions. In the future, such ML tools could potentially be customized and integrated into traveler information systems and traffic management applications. It should be noted that such general-purpose AI/ML tools could produce inaccurate results and may need to be re-trained and fully evaluated with large data before being considered for deployment.

Vertex AI: This AI toolkit produced by Google Cloud Services helps train models without code and with relatively low expertise required. It is designed to facilitate the development, deployment, scaling of ML

models, and customization of large language models (LLMs). AutoML in Vertex AI automates the selection of the best models based on the provided dataset and allows access to various open-source software (OSS) models.

Alteryx Machine Learning Platform: Alteryx ML Platform is a no-code AutoML product that offers a GUI for machine learning pipelines, education mode to teach analysts as they work, plugins for many common data source types, and analysis for score of data’s fitness for being used in machine learning algorithms.

Hugging Face: Hugging Face develops and shares tools for state-of-the-art Machine Learning models. It has a transformers library, a cutting-edge algorithm type primarily used in Natural Language Processing that powers applications such as ChatGPT, although they also can perform tasks like audio or image recognition. Hugging Face also offers high-quality datasets and models throughout the community.

Azure: Microsoft Azure platform offers a service for the development of models from training to deployment. The platform uses a “drag-and-drop” design which can help with building and testing various models in little time and less coding experience. Azure Cognitive Services includes a set of APIs for AI models for speech, language, vision, and decision-making. Text-to-lifelike speech, sentiment analysis, conversational language understanding, and content analysis using computer vision are among the services offered. Their OpenAI Service provides access to low-cost ready-to-use language models which can also be fine-tuned to the specific cases needed.

AWS: Amazon Web Services (AWS) offers a variety of tools for ML application development. Their SageMaker service enables easier training and deployment of ML models by providing detailed Jupyter Notebooks (interactive computing and data analysis notebook) which walk the user through the different stages of development. SageMaker also includes ways to prepare data at scale by facilitating collaboration among human labelers. Their “Data Wrangler” tool includes tools to import, prepare, transform, featurize, and analyze data and can offer insights into data quality. This can help lessen the burden associated with the most consuming part of model development, data preparation. A variety of other tools such as “AWS Marketplace” and “SageMaker Feature Store” are also available, which offer pre-trained models and feature management, respectively.

Roboflow, developed by the OpenCV team, is an in-browser, easy-to-use product that enables the development of computer vision models without significant programming knowledge. Roboflow is free to use if projects, including datasets, are open-sourced to contribute to the community.

Oracle AutoML: Oracle AutoML is designed for both experienced data scientists and those new to machine learning to automatically select the best machine learning algorithm for a given dataset and task and tune the hyperparameters for optimal performance. Being part of the Oracle ecosystem, it integrates with Oracle databases, allowing for data management and scalability.

Weka: Weka, short for Waikato Environment for Knowledge Analysis, is a suite of ML software written in Java. Developed by the University of Waikato in New Zealand, Weka includes a range of algorithms including those for preprocessing data, classification, regression, clustering, and association rules. Weka features a user-friendly graphical user interface that allows users to access its functionalities. This makes it suitable for beginners who are not familiar with programming. Weka is open source, which means it is freely available for use and modification.

H2O.ai: H2O.ai is an open-source platform for ML/AI, known for its scalability, ease of use, and performance. It’s designed to help data scientists and developers rapidly build and deploy ML/AI models. It offers a comprehensive suite of algorithms for tasks like regression, classification, clustering, and anomaly detection. It includes advanced methods like Gradient Boosting Machines (GBM), Random Forest, Deep Learning, and more. H2O.ai is user-friendly and offers interfaces for R, Python, Java, and a web-based GUI called Flow, making it accessible to a broad range of users with different skill levels.

Cognition Labs’ Devin: Designed by a startup named Cognition Labs, Devin uses generative AI/ML technologies to perform the functions of a software engineer. Released in March 2024, Devin is dubbed “the first AI software engineer” with capabilities such as passing engineering interviews, completing tasks

on the freelancing platform Upwork, building and deploying apps, and finding and fixing bugs. The user interacts with Devin through a chatbot, and the user can instruct Devin to train and fine-tune AI/ML models available on repositories like GitHub.

These types of tools that require minimal coding skills could be very valuable to DOTs and other transportation organizations which typically have limited skilled staff in code development and data science. These tools empower DOTs to update and refine ML models or even develop new ones without necessitating a deep understanding of ML intricacies and extensive coding proficiencies. This accessibility could significantly enhance their operational efficiency and innovation capacity, bridging the gap between technical challenges and practical solutions.

ML Tools Requiring Coding Skills

Various tools and frameworks have been developed to aid in the design, implementation, and deployment of ML models. However, many of these tools require a certain level of coding proficiency, making them more suitable for users with a background in programming or computer science. This section presents sample ML tools that necessitate coding skills, their main functionalities, applications, and the coding expertise required to effectively utilize them. From powerful libraries like TensorFlow and PyTorch, used for deep learning applications, to more specialized tools like scikit-learn for classical ML algorithms, this section discusses the diverse ecosystem of coding-centric ML tools, highlighting their unique features and the roles they play in the broader context of ML/AI.

Tensorflow: Tensorflow was introduced by Google to provide faster and more efficient computation for machine learning models. This has enabled easier processing on GPUs, making TensorFlow the computational infrastructure for other ML tools. TPUs (Tensor Processing Units) can also be used with code written in TensorFlow for further accelerating machine learning workloads. Tensorflow requires a relatively higher level of coding knowledge.

Keras: Keras is a high-level Python library built on top of the TensorFlow platform. It enables faster experimentation with different models through a simpler and more flexible API. Keras is primarily used to design deep neural networks and makes it easier to build different layer structures for ML models, which can facilitate development. Keras also offers many pre-trained models that can be easily implemented and fine-tuned for tasks such as NLP and computer vision.

NVIDIA NGC: NVIDIA NGC is a hub and platform for access to pre-trained AI/ML models and Jupyter Notebooks which include step-by-step instructions for various use cases (Hallak 2022). Their models include computer vision and Natural Language Processing (NLP) models trained for different use cases. They offer the TAO toolkit for fine-tuning existing models with processes such as data augmentation and iterative training and pruning. Their platform is accessible across multiple other cloud computing platforms such as Google Cloud and Amazon SageMaker. They have recently integrated their model shop inside Vertex AI to simplify deployment.

Gymnasium: Gymnasium (OpenAI Gym) is an open-source Python library that facilitates development by providing easier management of various reinforcement learning algorithms and run environments. This enables faster comparison between the models and easier modifications of models. It provides a variety of environments for testing and training RL agents, ranging from simple toy tasks to complex, real-world challenges. CleanRL is another Python library built on Gym API that is designed to help people new to the field by keeping the codebase simple and readable.

PyTorch: PyTorch is an open-source machine learning library developed by Facebook’s AI Research lab. PyTorch is the Python version of Torch, an open-source framework with a variety of tools and libraries for AI/ML development. PyTorch includes a range of pre-built modules and functions for building neural networks, which simplifies the development process and reduces the need to write boilerplate code.

PyTorch provides strong support for GPU acceleration using NVIDIA’s CUDA, enabling it to handle the computationally intensive tasks involved in training deep neural networks.

MXNet: Apache MXNet is a lightweight yet flexible and scalable deep learning framework that can be used on different processing units and machines. It has a NumPy-like programming interface which can help users familiar with that library start deep learning projects. Its memory efficiency and support for a variety of programming languages such as Python, Java, C++, JavaScript, and Julia make it a candidate for many portable devices.

OpenCV: Developed first by Intel, OpenCV is a free open-source Python library for computer vision that offers tasks such as camera calibration, object detection, and optical flow analysis. It is the most used library for processing images and videos.

Scikit-learn: This Python library is a community-driven project to help merge data analysis tools such as NumPy, Matplotlib, and SciPy and make simpler and more efficient predictive data analysis using traditional machine learning models such as SVM, random forest, and K-means. A variety of examples and community projects are available to learn how to apply these tools.

Caffe: This deep learning framework was developed by a team of scholars at Berkeley to help build fast and efficient models that can be used for real-time applications. They provide slides and tutorials for many applications including many academic research projects.

Github Copilot: Copilot uses natural language prompts to generate coding suggestions across dozens of languages. It takes advantage of OpenAI Codex and is trained on a billion lines of code hosted on GitHub. It can suggest individual lines and entire functions to help users write code quicker and with less effort.

Google Colab: A free Jupyter Notebook environment that runs entirely in the cloud. It allows users to write and execute Python code through their browsers. This means there’s no need for any local setup, making it accessible and convenient. Google Colab provides free access to Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs). Google Colab is a powerful tool but has limited session durations and restrictions on the usage of resources. For long-running computations or extremely resource-intensive tasks, a dedicated environment might be more suitable.

Apache Spark MLlib: Spark is an open-source product usable in Java, Scala, Python, and R designed to give an engine for large-scale data analytics. It can be run on a single machine but is capable of running parallel jobs on thousands of machine clusters. It has a machine learning library, Mllib, that includes many typical machine learning algorithms such as gradient boosting, clustering, and regression models.

Anaconda: Anaconda is a distribution of Python and R meant to simplify library management and deployment of data science projects. It comes installed with over 250 packages for scientific computing and includes several applications for easy development of data science code including Jupyter Notebooks, RStudio, Spyder, and Visual Studio Code.

IBM Watson Studio: Watson Studio provides a collaborative platform for data scientists to build, train, and deploy ML models. It brings together open-source frameworks like PyTorch, TensorFlow, and scikit-learn and allows the users to create models in languages such as Python, R, and Scala.

YOLO: YOLO, which stands for “You Only Look Once,” is a popular algorithm used for object detection in images and videos. It represents a significant advancement in computer vision technology, particularly in the field of real-time object detection (e.g., detecting vehicles and pedestrians in a video). YOLO is available as open-source software, which has allowed a wide range of developers and researchers to use and modify it for various applications. Like other deep learning models, YOLO requires a substantial amount of labeled training data to learn accurate object detection. It is trained on large datasets like COCO (Common Objects in Context) and PASCAL VOC. Since its introduction, there have been several versions of YOLO, each improving upon the last in terms of accuracy and speed. For example, YOLOv3 and YOLOv4 brought improvements in terms of detection accuracy and the ability to detect smaller objects. Pre-trained YOLO models could be integrated into various applications without the need to create new ML models.

In the next chapter of this NCHRP report, two ML applications are provided to show how YOLO can be used directly within custom applications (in this case for detecting vehicles and stop signs). It should be noted that other than YOLO, there are many other pre-trained models and neural networks, mostly available as open-source codes. Some well-known models are listed below.

- Mask R-CNN (Region-based Convolutional Neural Network) is a state-of-the-art model for instance segmentation, which is the task of identifying object boundaries at the pixel level in an image. It’s an extension of Faster R-CNN, a model designed for object detection. Mask R-CNN has been widely used in various applications, including autonomous vehicles, robotics, and medical image analysis, where precise object localization is crucial.

- ResNet (Residual Networks): Developed by Microsoft, ResNet has a deep network architecture (up to 152 layers) and is primarily used for image classification. It introduced residual learning to ease the training of these very deep networks.

- VGGNet: Developed by the Visual Graphics Group at Oxford, a feature of VGGNet is its simplicity, using only 3x3 convolutional layers stacked on top of each other in increasing depth. It’s widely used for image recognition tasks.

- Inception (GoogleNet): Known for its efficiency in terms of computation and memory usage, Inception has optimized the performance of deep neural networks on computer vision tasks.

- BERT (Bidirectional Encoder Representations from Transformers): Developed by Google, BERT has revolutionized the field of natural language processing (NLP). It uses the transformer architecture to process words in relation to all the other words in a sentence, rather than one by one in order.

- SSD (Single Shot MultiBox Detector): This is a model for detecting objects in images using a single deep neural network. Its features are its speed and efficiency, making it suitable for real-time object detection tasks.

- MobileNets: Developed by Google, these are small, low-latency, low-power models designed to bring ML models to mobile devices.